Bayesian Analysis

The Bayesian approach has become increasingly popular thanks to improvements in modern computing power for machine learning and big data. Bayesian analysis is a fundamentally different approach from the frequentist one, and it is more challenging to learn. To understand it, we first need a working knowledge of conditional probability. Second, we need to know the common distributions, such as the normal, Bernoulli, binomial, gamma, beta, Cauchy, and Poisson. Third, we need to be comfortable with calculus, because we have to compute derivatives and integrals of these distributions. Last but not least, we need to be familiar with approximation and sampling techniques such as grid sampling, variational Bayes, and Markov chain Monte Carlo (MCMC), including the popular Gibbs sampling, Metropolis-Hastings sampling, and Hamiltonian Monte Carlo (HMC). Luckily, software tools such as JAGS (Gibbs sampling) and Stan (HMC) can do the sampling for us.

The concept is simple. According to Bayes’ theorem:

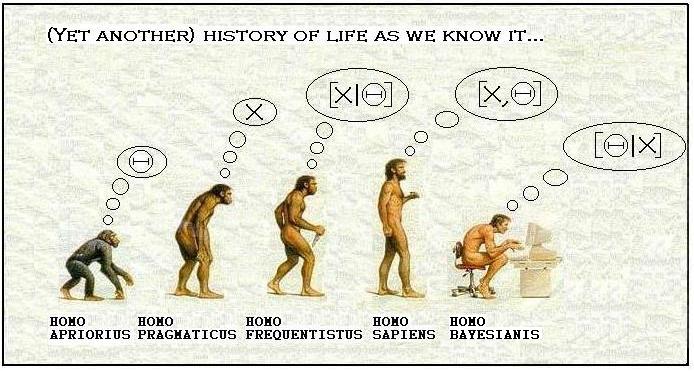
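To make the theorem concrete, here is a minimal sketch of the grid approach mentioned earlier, in plain Python. The data (6 successes in 9 trials), the flat prior, and all variable names are assumptions made up for illustration. The posterior is obtained by multiplying the likelihood by the prior at each grid point and then normalizing.

```python
from math import comb

# Hypothetical data (not from the text): 6 successes in 9 trials.
successes, trials = 6, 9

# Grid of candidate values for the unknown success probability theta.
grid = [i / 100 for i in range(101)]

# Flat prior: every grid value is equally plausible before seeing the data.
prior = [1.0 for _ in grid]

# Binomial likelihood of the data at each grid value.
likelihood = [
    comb(trials, successes) * t**successes * (1 - t) ** (trials - successes)
    for t in grid
]

# Bayes' theorem: the posterior is proportional to likelihood times prior,
# then normalized so that the grid probabilities sum to one.
unnormalized = [lik * p for lik, p in zip(likelihood, prior)]
evidence = sum(unnormalized)
posterior = [u / evidence for u in unnormalized]

posterior_mode = grid[posterior.index(max(posterior))]
posterior_mean = sum(t * p for t, p in zip(grid, posterior))
print(f"posterior mode ~ {posterior_mode:.2f}, posterior mean ~ {posterior_mean:.3f}")
```

A grid like this only works for one or two parameters. With more parameters the number of grid points explodes, which is why MCMC samplers are used in practice.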

If the frequentist and Bayesian approaches are just two different ways of looking at things, why and when is the Bayesian approach recommended over the frequentist one?

Here are a few cases where the Bayesian approach is preferable to the frequentist approach:

Clear prior information. An example is problem 6 in Chapter 1 of Andrew Gelman’s book Bayesian Data Analysis, 3rd edition (BDA3). The prior information is that approximately 1/125 of all births are fraternal twins and 1/300 of births are identical twins.
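As a worked sketch of how such prior information is used: as I recall it, the exercise asks for the probability that a man who had a twin brother was an identical twin. The two conditional probabilities below are modelling assumptions that are not stated in the text above (identical twins are always the same sex, fraternal twins are the same sex half the time), and the variable names are made up for illustration.

```python
from fractions import Fraction

# Prior information quoted in the text.
p_fraternal = Fraction(1, 125)
p_identical = Fraction(1, 300)

# Assumptions for this sketch (not stated in the text):
# given the man is a twin, his twin is a brother with probability 1
# if the twins are identical and 1/2 if they are fraternal.
p_brother_given_identical = Fraction(1)
p_brother_given_fraternal = Fraction(1, 2)

# Bayes' theorem: P(identical | twin brother).
numerator = p_brother_given_identical * p_identical
evidence = numerator + p_brother_given_fraternal * p_fraternal
p_identical_given_twin_brother = numerator / evidence

print(p_identical_given_twin_brother)  # 5/11
```

Under these assumptions the prior odds of identical versus fraternal twins (125 to 300) shift to 5/11, because a same-sex twin is twice as likely under the identical hypothesis.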

Separation problems. For example, a logistic regression may fail to converge because of high dimensionality and a small sample size.
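The following is a minimal sketch of why a prior helps here, not a full Bayesian fit. It uses a tiny made-up data set in which the predictor perfectly separates the outcomes, a one-parameter logistic model without intercept, and a Normal(0, 2.5) prior on the slope. The data, the prior scale, and the helper `fit_slope` are all assumptions for illustration. Without a prior, the maximum-likelihood slope keeps growing the longer we optimize. With the prior, the posterior mode (a MAP estimate, swapped in here for full posterior sampling) settles at a finite value.

```python
import math

# Hypothetical, perfectly separated data: y is 1 exactly when x > 0.
xs = [-2.0, -1.0, 1.0, 2.0]
ys = [0, 0, 1, 1]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_slope(steps, prior_sd=None, lr=0.1):
    """Gradient ascent on the log-likelihood of the slope b.

    If prior_sd is given, the gradient of a Normal(0, prior_sd)
    log-prior is added, so the result approximates the posterior mode.
    """
    b = 0.0
    for _ in range(steps):
        grad = sum(x * (y - sigmoid(b * x)) for x, y in zip(xs, ys))
        if prior_sd is not None:
            grad -= b / prior_sd**2
        b += lr * grad
    return b


# Without a prior the estimate depends on how long we run the optimizer.
mle_short, mle_long = fit_slope(1_000), fit_slope(10_000)

# With a prior the estimate is stable.
map_short = fit_slope(1_000, prior_sd=2.5)
map_long = fit_slope(10_000, prior_sd=2.5)

print(f"no prior:   {mle_short:.2f} after 1,000 steps, {mle_long:.2f} after 10,000")
print(f"with prior: {map_short:.2f} after 1,000 steps, {map_long:.2f} after 10,000")
```

In practice a tool such as Stan would sample the whole posterior rather than only locate its mode, but the stabilizing effect of the prior is the same.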

Estimating multiple outcomes with credible intervals. For example, family doctors try to diagnose diseases (such as a cold or the flu) based on multiple symptoms (such as headache, sore throat, or high temperature), and the probabilities across the limited set of possible diseases sum to one.

Small sample sizes with multiple experiments and a limited budget. Here a Bayesian meta-analysis is preferable to a traditional meta-analysis, which suffers from high heterogeneity across different sources, because it provides a credible interval instead of a confidence interval.
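To illustrate what a credible interval looks like for a small sample, here is a minimal single-experiment sketch (not a meta-analysis). The data (3 successes in 10 trials) and the flat Beta(1, 1) prior are assumptions made up for illustration. With binomial data and a beta prior the posterior is again a beta distribution, so we can draw from it directly and read the interval off the sorted draws.

```python
import random

random.seed(0)  # fixed seed so the sketch is reproducible

# Hypothetical small experiment (not from the text): 3 successes in 10 trials.
successes, trials = 3, 10

# Flat Beta(1, 1) prior; the posterior is Beta(1 + s, 1 + n - s).
alpha_post = 1 + successes
beta_post = 1 + trials - successes

# Draw from the posterior and take the central 95% of the draws.
draws = sorted(random.betavariate(alpha_post, beta_post) for _ in range(100_000))
lower = draws[int(0.025 * len(draws))]
upper = draws[int(0.975 * len(draws))]
posterior_mean = alpha_post / (alpha_post + beta_post)

print(f"95% credible interval: ({lower:.2f}, {upper:.2f}), mean {posterior_mean:.2f}")
```

The interval has a direct probability reading: given the model and the prior, the success probability lies between `lower` and `upper` with 95% posterior probability.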

Reference: